As your applications grow and spread across multi-cloud infrastructures, it's easy to feel a sense of panic about the risks to your environment. As your list of worries grows, you must ensure that your systems follow policies and regulatory standards, that they are optimized for your workloads, and that reporting gives you the insights you need to act on any problems.

Chef Compliance Automation (Chef InSpec, Infra, Workstation and Automate) provides an easy-to-understand and customizable code framework that does the job for you. With as little as a single Chef Cookbook and Recipe, you can achieve your goals -- even when dealing with different cloud architectures.

In this blog post, you'll get a taste of using Chef Compliance Automation to configure and verify AWS, Azure, and Google Cloud Platform instances using some basic recipes and profiles.

Before proceeding, you may want to look at our previous blog post that provides an overview of implementing InSpec in a single cloud.

Setting up your Environment

Whether you're working on a Windows, macOS or Linux workstation, the best way to get started is to install Chef Workstation, a collection of tools that enable you to create, test and run Chef code. Refer to the Chef Infra 101: The Road to Best Practices guide to complete the steps to configure a workstation with Chef Infra Server, which stores your code.

At a minimum, copy your Chef Infra Server user.pem key to your workstation and run the following command to configure your Chef Workstation environment:

$ knife configure init-config

The next steps presume you have access to public cloud services. Create at least one raw instance on AWS, Azure or GCP. These will be your target nodes, which you'll configure and audit using Chef.

Communication between your system and these cloud services requires you to have created Identity and Access Management (IAM) credentials on each. Download those .crt or .pem keys to your workstation and place them in your $HOME/.ssh directory.

Bootstrap AWS, Azure and GCP nodes

Bootstrapping is the process of making Chef aware of nodes you want to manage. During the process, the Chef Client agent is installed on your target nodes and secure configurations that match your environment are placed on each machine. These establish communication between your workstation, your target nodes and your Chef Infra Server, which stores and tracks the code you apply. The Chef Client does the work of applying configurations and reporting each node's state.

Bootstrap AWS and Azure nodes

Bootstrapping is done using knife, one of the tools provided when you installed Chef Workstation. The command is something like the following example:

$ knife bootstrap 54.161.162.68 -N <node-name> -U <username> --sudo -i ~/.ssh/iam-key-file.pem

Where:

54.161.162.68 – The public IP of your cloud instance

-N node-name – The name you want to assign to the node, such as aws-web01 or azure-redhat-web45. The name will be how Chef sees the node.

username – The username for the cloud instance, such as ec2-user or ubuntu

iam-key-file.pem – The IAM key file associated with your cloud account
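If you have several instances to bootstrap, the command above can be generated rather than typed by hand. The following is a minimal Ruby sketch under stated assumptions: the `NODES` inventory and the `bootstrap_command` helper are hypothetical, and the second IP address, the `azureuser` login and the Azure key path are placeholders rather than values from this post. It only builds and prints the command strings, so nothing is run against your instances.

```ruby
# Hypothetical inventory: replace these entries with your own instances.
NODES = [
  { ip: '54.161.162.68', name: 'aws-web01', user: 'ec2-user', key: '~/.ssh/iam-key-file.pem' },
  { ip: '203.0.113.10', name: 'azure-redhat-web45', user: 'azureuser', key: '~/.ssh/azure-key-file.pem' },
].freeze

# Build the knife bootstrap command for one node, using the same flags as above.
# Values are assumed to contain no spaces, so a plain join is enough here.
def bootstrap_command(node)
  ['knife', 'bootstrap', node[:ip],
   '-N', node[:name],
   '-U', node[:user],
   '--sudo',
   '-i', node[:key]].join(' ')
end

NODES.each { |node| puts bootstrap_command(node) }
```

Review the printed commands before running them, since each one installs Chef Client on the target instance.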

Bootstrap a Google Cloud Platform node

You can generate your own keys to manage GCP authentication via SSH. For example, on a macOS or Linux system:

$ ssh-keygen -t rsa -f $HOME/.ssh/mygcp_rsa

You need to add your public SSH key to your Google project metadata to access all VMs in a project.

Copy the public key, paste it into the “Metadata -> SSH keys” section, and save your changes. You can now SSH to your GCP VM using your private key:

$ ssh -i <private_key_file_name> <Username of your created instance>@<External IP>

Example:

$ ssh -i linuxgcp akshay9@34.125.89.53

With that key created, you can bootstrap your GCP node:

$ knife bootstrap 34.125.198.163 -N gcplinux10 -U system-user-name --sudo -i ~/.ssh/mygcp_rsa

Where:

34.125.198.163 – The external IP of the GCP instance

system-user-name – Username of your created instance

mygcp_rsa – Generated SSH key file

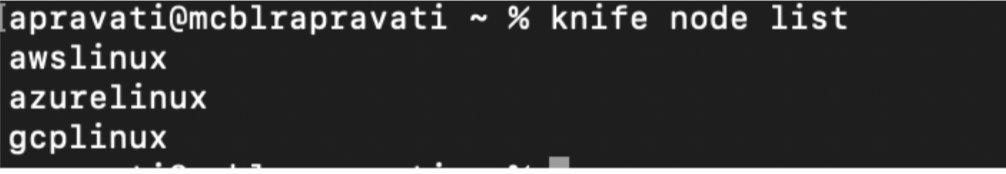

You can verify that all your cloud nodes have been successfully bootstrapped by running $ knife node list:

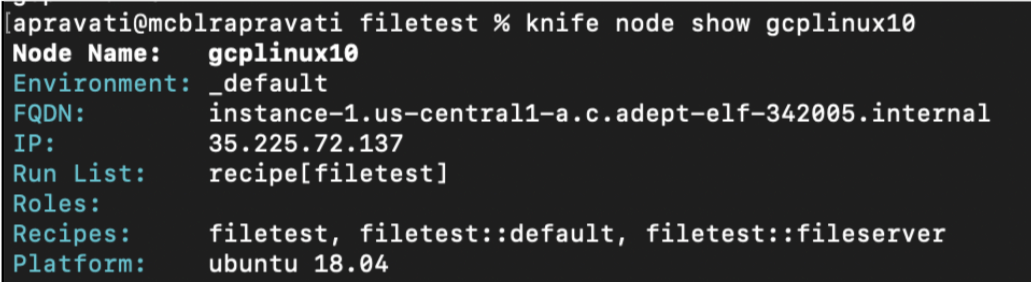

You can get details of a node by using knife node show <node-name>.

Recipe Example

With Chef, configuration management is handled by cookbooks and recipes, which define what you want to be done. The following example installs and runs a different web server on each cloud environment. You can use a Chef generator to create the cookbook and edit the default.rb recipe that's created:

$ cd $HOME/chef-repo/cookbooks

$ chef generate cookbook file-example-cookbook

$ cd file-example-cookbook/recipes/

Edit default.rb and add the following. Notice that this example uses logic so you can use the same recipe on AWS, Azure or GCP instances and on different OS versions at the same time:

# Cookbook:: file-example-cookbook
# Recipe:: default
#
# Copyright:: 2022, Your Name, All Rights Reserved.

# Attach the compliance profile that ships with this cookbook.
include_profile 'file-example-cookbook::fileserver'

if ec2?
  # Refresh the apt cache first on Debian-family platforms.
  apt_update 'update' if platform_family?('debian')

  package 'nginx' do
    action :install
  end

  service 'nginx' do
    action [:enable, :restart]
  end
elsif gce?
  package 'apache2' do
    action :install
  end

  service 'apache2' do
    action [:enable, :restart]
  end
elsif azure?
  package 'lighttpd' do
    action :install
  end

  service 'lighttpd' do
    action [:enable, :restart]
  end
end
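The branching in the recipe can also be expressed as a lookup table, which keeps the per-cloud choices in one place. The plain-Ruby sketch below is an illustration only: `WEB_SERVER_BY_CLOUD` and `web_server_for` are hypothetical names, and the cloud is passed in as a symbol instead of being detected with the `ec2?`, `gce?` and `azure?` helpers, so the snippet runs outside of Chef.

```ruby
# Hypothetical lookup table mirroring the recipe: cloud => package and service name.
WEB_SERVER_BY_CLOUD = {
  ec2: 'nginx',
  gce: 'apache2',
  azure: 'lighttpd',
}.freeze

# Return the web server for a cloud, or nil when the cloud is not listed
# (the recipe likewise installs nothing outside these three clouds).
def web_server_for(cloud)
  WEB_SERVER_BY_CLOUD[cloud]
end

%i[ec2 gce azure].each do |cloud|
  puts "#{cloud}: #{web_server_for(cloud)}"
end
```

In a real recipe you would derive the key from the cloud helpers and pass the resulting name to the package and service resources, so adding a fourth cloud becomes a one-line change to the table.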

As a best practice, always lint your recipes and profiles with Cookstyle. The -a flag also auto-corrects the offenses it can fix safely:

$ cookstyle -a

When your recipes are error-free, you can upload them to the Chef Infra Server using $ knife cookbook upload <cookbook-name>, but the more modern way is to use Policyfiles. Policyfiles bundle multiple cookbooks and included profiles into immutable artifacts that can be assigned using Policy Names and Policy Groups. Either approach will upload your cookbook, including the recipe files, and make them available for your nodes to download and use. Learn more about Policyfiles on the Chef Docs website.

InSpec code to check that port 80 is listening and that the nginx package is installed:

control 'file-example-cookbook' do
  impact 1.0
  title 'Test for file'
  desc 'Check if the port is listening'

  describe port(80) do
    it { should be_listening }
  end

  describe package('nginx') do
    it { should be_installed }
  end
end
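To make the `port(80)` check more concrete, here is a rough plain-Ruby analogue of what "listening" means in practice: a TCP connection to the port succeeds. This is not how InSpec implements its resource. The `listening?` helper is hypothetical, and the local host address and two-second timeout are assumptions chosen for the sketch.

```ruby
require 'socket'

# Return true when something accepts TCP connections on host:port.
# Refused or timed-out connections are treated as "not listening".
def listening?(port, host: '127.0.0.1', timeout: 2)
  Socket.tcp(host, port, connect_timeout: timeout) { true }
rescue SystemCallError, IOError
  false
end

puts "port 80 listening: #{listening?(80)}"
```

Unlike this probe, the InSpec control runs against the target node and reports the result as part of the compliance profile.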

Using Custom Resources

InSpec provides a mechanism for defining custom resources. These enable you to better describe the intended purpose of the platform you are testing. It sometimes becomes necessary to build your own resource to help you customize your infrastructure and platforms.

Chef InSpec has hundreds of resources that can specifically target features of AWS, Azure, and GCP instances. Using these resources, you can audit a wide variety of properties of your cloud infrastructure.

Resource packs are simply collections of resources that can be leveraged via InSpec profile configuration. The following InSpec profile examples show how you can use some of these resources to verify specific AWS and GCP configurations.

Failing to include the cloud resource pack in your profile will result in an “Undefined Local Variable or Method Error for Cloud Resource”.

You can get details of all the InSpec resources here.

Example:

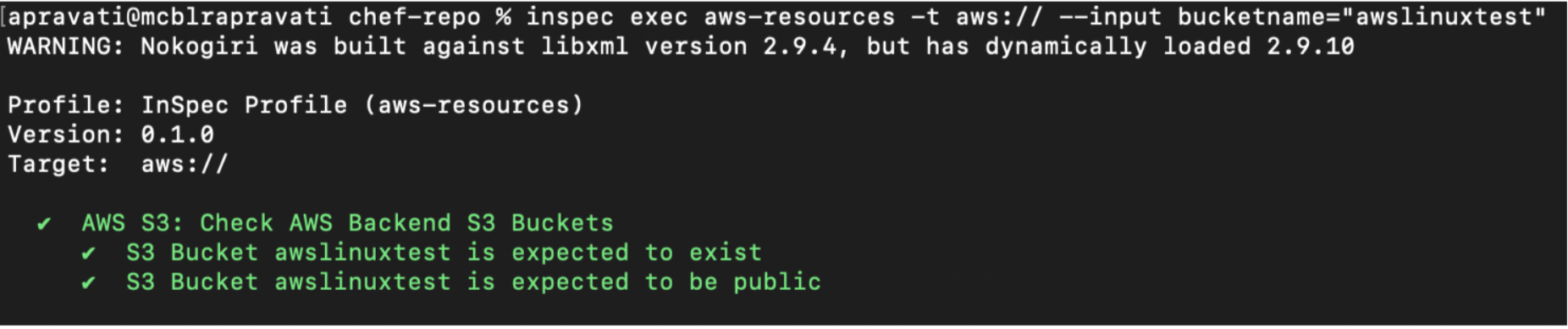

An S3 bucket named awslinuxtest is created with public access. With the help of the aws_s3_bucket resource, you can test whether the bucket exists and is public.

default.rb

bucketname = input('bucketname')

control 'AWS S3' do
  impact 0.7
  title 'Check AWS Backend S3 Buckets'

  describe aws_s3_bucket(bucket_name: bucketname) do
    it { should exist }
    it { should be_public }
  end
end

inspec.yml file

name: aws-resources
title: InSpec Profile
maintainer: Akshay
copyright: Akshay
copyright_email: akshay@chef.com
license: Apache-2.0
summary: An InSpec Compliance Profile
version: 0.1.0
depends:
  - name: inspec-aws
    url: https://github.com/inspec/inspec-aws/archive/v1.21.0.tar.gz
supports:
  - platform: aws
attributes:
  - name: bucketname
    description: "akshaytest-awsbucket"
    required: true
    value: $DEV_BUCKET
    type: string

Command to execute the test remotely.

$ inspec exec aws-resources -t aws:// --input bucketname="awslinuxtest"
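If you want to post-process the results, `inspec exec` can also emit JSON with `--reporter json`. The Ruby sketch below summarizes such a report per control. The sample document is hand-written and heavily trimmed for illustration (a real report carries many more fields, and the `code_desc` strings here are made up), and `summarize` is a hypothetical helper.

```ruby
require 'json'

# Hand-written, trimmed sample in the general shape of the InSpec JSON report.
SAMPLE_REPORT = <<~JSON
  {
    "profiles": [
      {
        "name": "aws-resources",
        "controls": [
          {
            "id": "AWS S3",
            "results": [
              { "status": "passed", "code_desc": "bucket is expected to exist" },
              { "status": "failed", "code_desc": "bucket is expected to be public" }
            ]
          }
        ]
      }
    ]
  }
JSON

# Count result statuses for each control id across all profiles.
def summarize(report)
  report.fetch('profiles', []).each_with_object({}) do |profile, summary|
    profile.fetch('controls', []).each do |control|
      statuses = control.fetch('results', []).map { |result| result['status'] }
      summary[control['id']] = statuses.tally
    end
  end
end

summarize(JSON.parse(SAMPLE_REPORT)).each do |id, counts|
  puts "#{id}: #{counts}"
end
```

To try it on real output, replace the sample with the contents of a file produced by the JSON reporter.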

Though these examples bundle configurations and tests into single recipes and profiles, in most enterprises these would likely be separated. It's a best practice to create separate profiles for each cloud infrastructure, but this post combines them for clarity.

Create a Policyfile

A key consideration when creating any Chef cookbook is knowing that it contains code you trust. By using Policyfiles, you're creating immutable objects (bundles of cookbooks, recipes, profiles) and ensuring the code hasn't been altered in some way.

When you created the cookbook with the Chef generator command earlier, a Policyfile.rb file was automatically created inside that cookbook folder. Open the file in your editor and change it to look something like the following:

# Policyfile.rb - Describe how you want Chef Infra Client to build your system.
# For more information on the Policyfile feature, visit
# https://docs.chef.io/policyfile/

# A name that describes what the system you're building with Chef does.
name 'file-example-cookbook'

# Where to find external cookbooks:
default_source :chef_repo, '~/chef-repo/cookbooks' do |s|
  s.preferred_for 'file-example-cookbook'
end
default_source :supermarket
default_source :chef_server, 'https://<automate-server-IP>.compute-1.amazonaws.com/organizations/lab'

# run_list: chef-client will run these recipes in the order specified.
run_list 'file-example-cookbook::default'

With this Policyfile.rb in place, you can bundle your cookbooks and compliance profiles and upload them to Chef Server with two short commands. The first rolls everything up and outputs a .lock.json file. The second uploads it to your Chef Infra Server.

$ chef install Policyfile.rb

$ chef push <policy_group> Policyfile.lock.json

Where:

policy_group: Auto-create or use a unique policy group for different purposes, such as build, test and prod, and associate your cookbooks and profiles with those groups. The default value is _default.
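The lock file that `chef install` writes is plain JSON, so you can inspect what a push will pin before you run it. The Ruby sketch below reads a trimmed, hand-written sample rather than a real lock file: the revision and identifier values are placeholders, the version shown is only an example, and `locked_cookbooks` is a hypothetical helper.

```ruby
require 'json'

# Hand-written, trimmed sample in the general shape of Policyfile.lock.json.
SAMPLE_LOCK = <<~JSON
  {
    "revision_id": "<revision-id>",
    "name": "file-example-cookbook",
    "run_list": ["recipe[file-example-cookbook::default]"],
    "cookbook_locks": {
      "file-example-cookbook": { "version": "0.1.0", "identifier": "<identifier>" }
    }
  }
JSON

# List each locked cookbook with the version the policy pins.
def locked_cookbooks(lock)
  lock.fetch('cookbook_locks', {}).map { |name, info| "#{name} #{info['version']}" }
end

lock = JSON.parse(SAMPLE_LOCK)
puts "policy: #{lock['name']}"
puts "run_list: #{lock['run_list'].join(', ')}"
locked_cookbooks(lock).each { |line| puts "locked: #{line}" }
```

Because the pushed artifact is built from this lock file, checking it is a quick way to confirm which cookbook versions each policy group will receive.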

With your nodes bootstrapped and your code pushed to the server, the next step is to assign the recipes to the nodes. You can assign them using run_lists or by setting policy groups and names.

To assign via run_list:

$ knife node run_list add <node_name> 'recipe[<cookbook_name>]'

To assign via policy:

$ knife node policy set <node_name> <policy_group> <policy_name>

Example:

$ knife node policy set myawsnode test file-example-cookbook

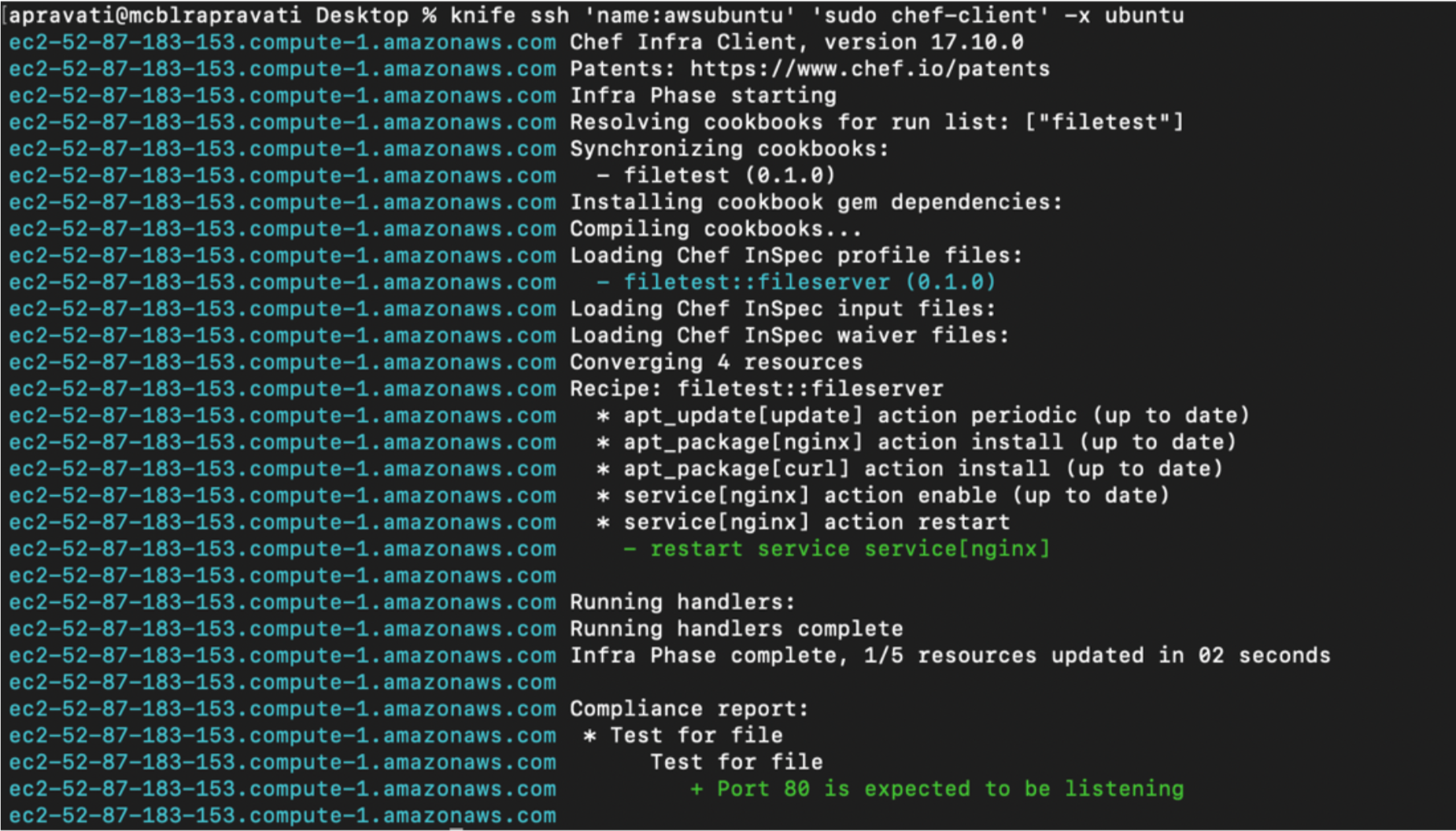

To apply your code to your nodes, you can manually log in to each remote node and run sudo chef-client, or you can run the command remotely using the knife ssh command from your workstation. Chef recognizes 'name:*' as a wildcard search that matches all nodes. The -a option names the node attribute that knife uses as the connection address, such as ipaddress:

$ knife ssh 'name:*' 'sudo chef-client' -a <attribute>

Tip: If you get the error “Net::SSH::AuthenticationFailed: Authentication failed for user” while running knife ssh, make sure you have added your keys to the SSH agent:

$ ssh-add <key_name>

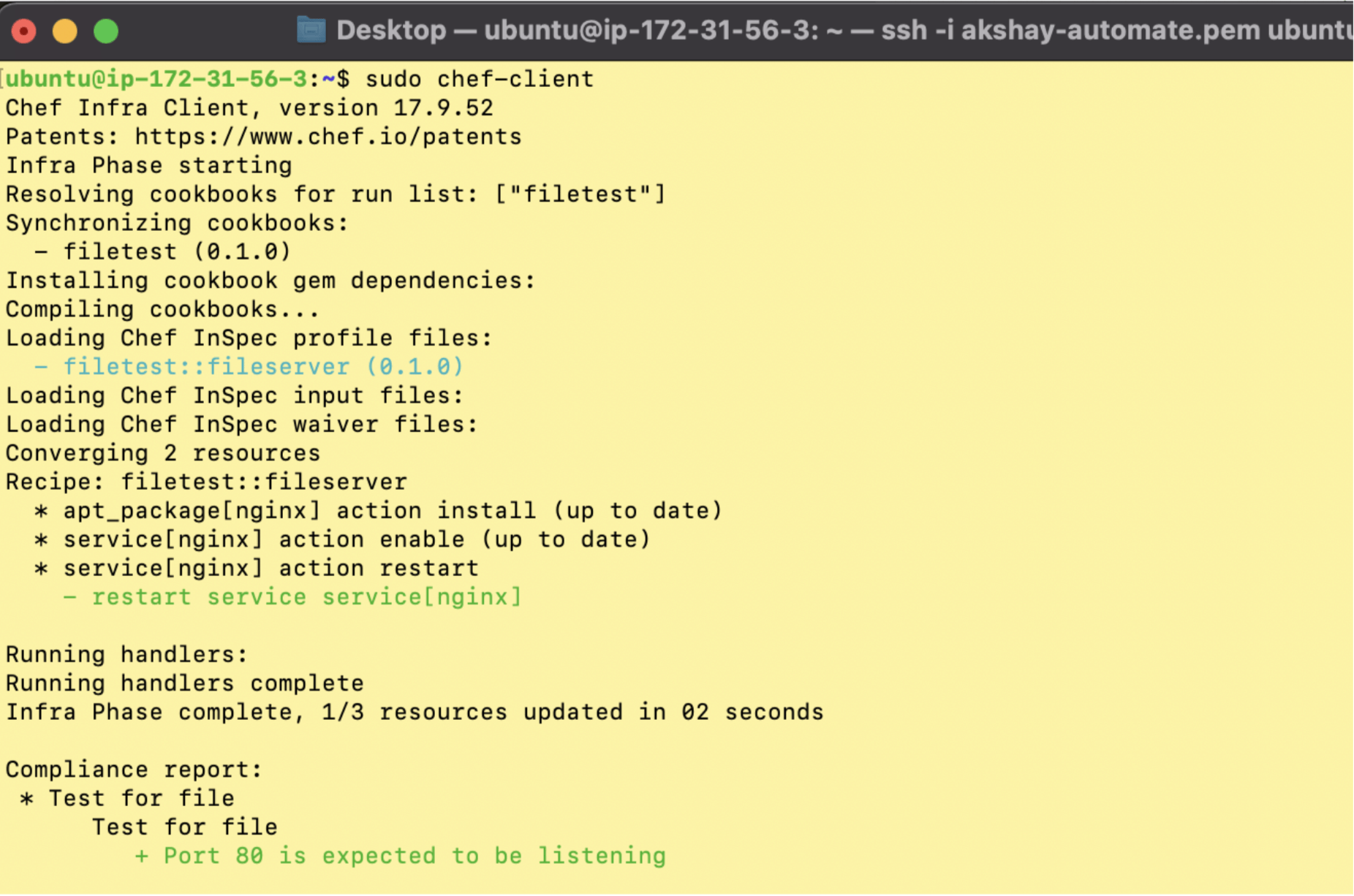

Below is an example of manually running the command “sudo chef-client” on an AWS node.

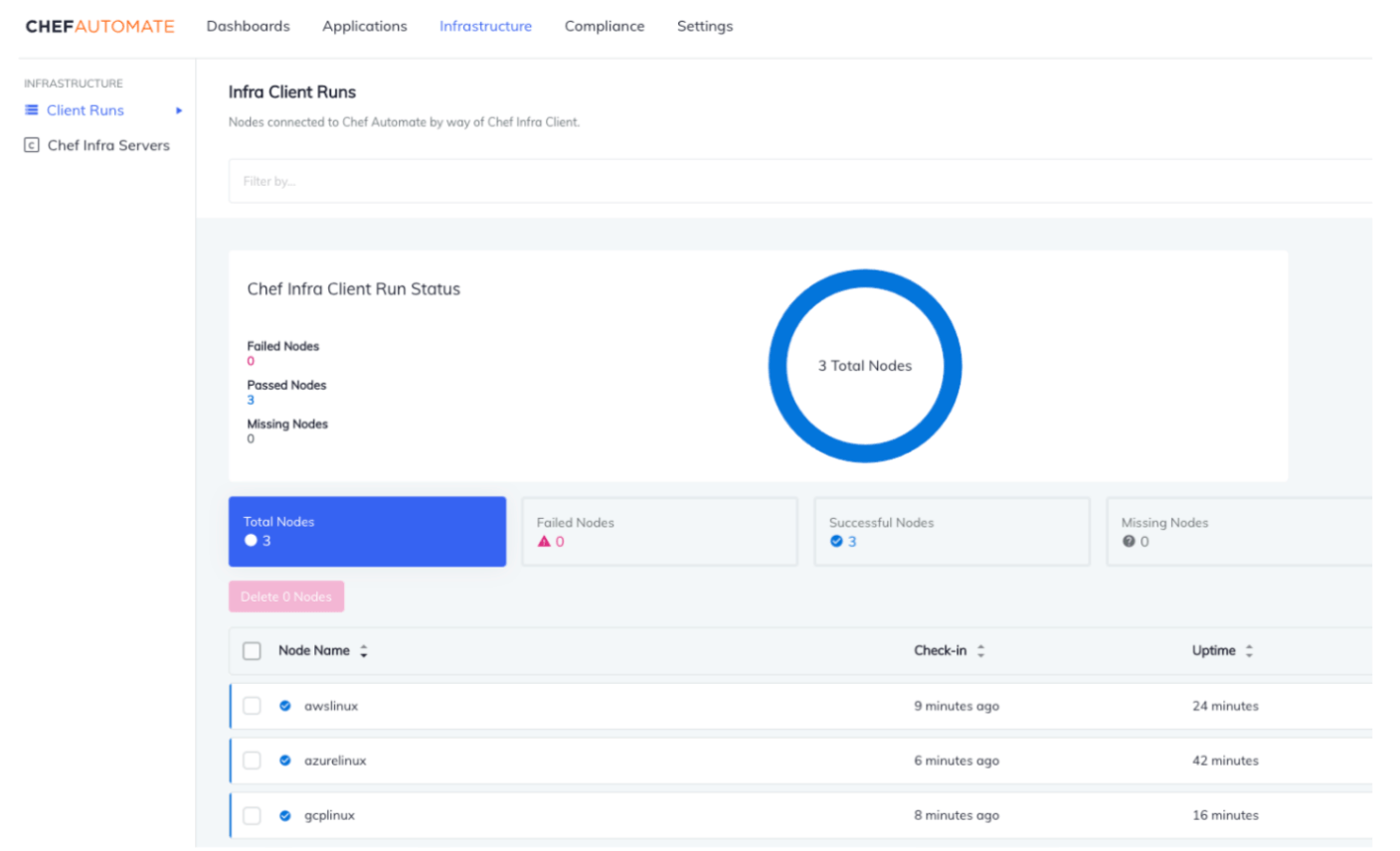

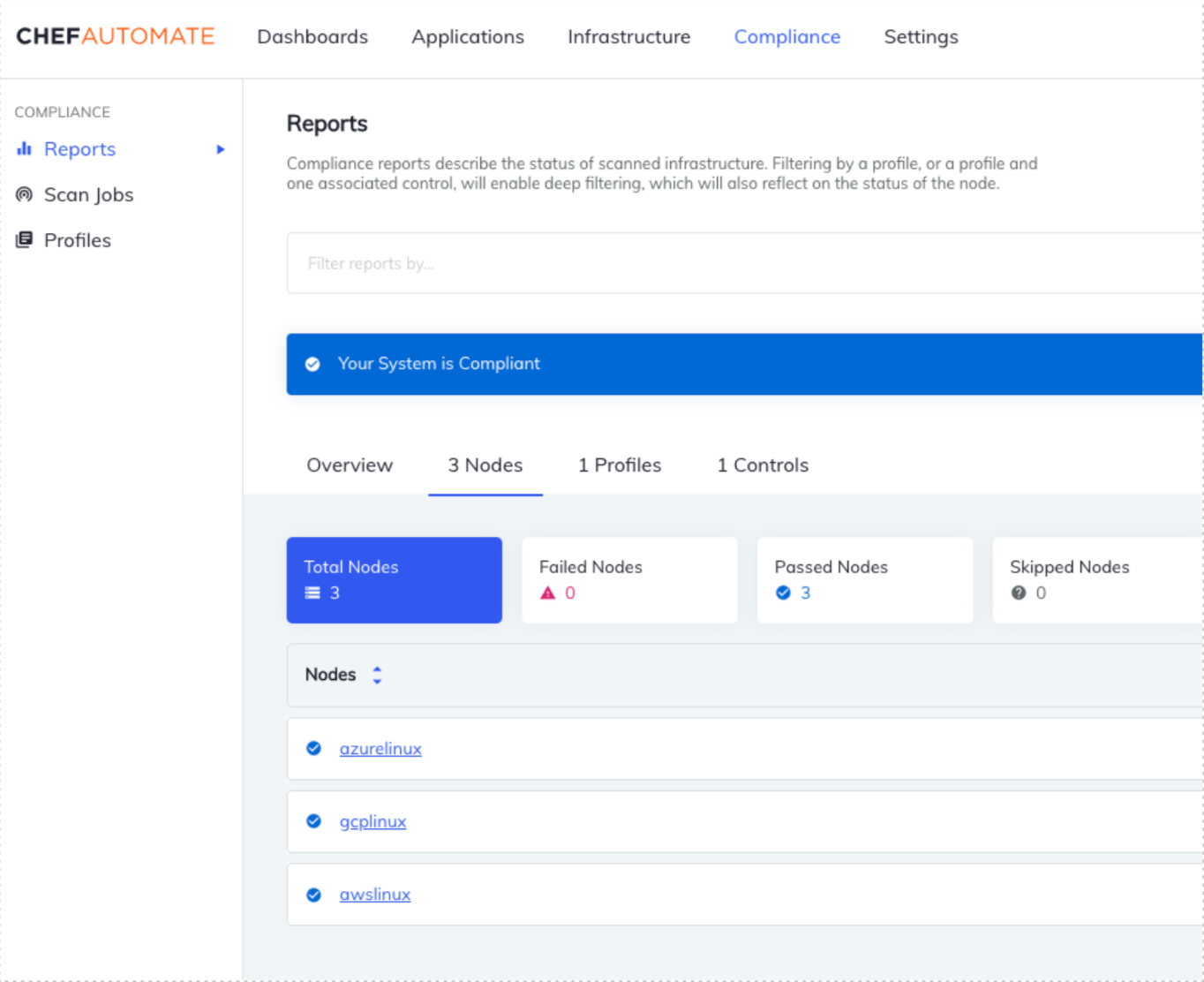

If you're running Chef Automate, you can check the results by logging into your Automate dashboard and seeing node statuses in the Infrastructure and Compliance tabs.

The Infrastructure tab displays all the nodes that have been successfully bootstrapped.

The Compliance tab shows whether the recipes have been successfully deployed on each node and verified with the InSpec profile.

Conclusion

This blog post explained how to use Chef Infra and Chef InSpec on multi-cloud platforms. You should now better understand how to bootstrap a node, get chef-client installed, and configure the node with Chef Infra, and how to use InSpec to verify the security and compliance needs of your system. Hundreds of built-in Chef resources allow you to check specific properties of your cloud-based systems and add logic to manipulate each configuration differently.

Next Steps

Once you get a feel for these Chef basics, you can get into real-life scenarios by looking at the Chef Infra 101: The Road to Best Practices guide.

Resources

- Learn Chef is a great place to start your journey with Chef Workstation and InSpec

- Download Chef Workstation

- Watch a Chef InSpec training video: https://www.youtube.com/watch?v=Wg94f2Z-d9c&t=826s